The mathematical concepts of eigenvalues and eigenvectors are pivotal in various fields such as quantum mechanics, vibration analysis, facial recognition systems, and even in Google's PageRank algorithm. These terms might seem complex, but they provide a powerful tool for analyzing linear transformations and solving matrix equations. Understanding how to tackle questions on eigenvalues and eigenvectors can significantly enhance your mathematical prowess and open doors to numerous applications in technology and science.

Eigenvalues and eigenvectors are critical in transforming the abstract notions of linear algebra into practical applications. They represent the core of many processes in modern computing and engineering, aiding in the simplification of matrix operations. By mastering these concepts, you can gain a deeper understanding of how systems behave under certain transformations, helping to predict outcomes and optimize solutions in various scientific and technological contexts.

In this comprehensive article, we'll delve into the intricacies of questions on eigenvalues and eigenvectors, providing detailed explanations and examples to clarify these often misunderstood concepts. This guide is designed for students, educators, and professionals who seek to expand their knowledge and application of these mathematical tools. With a focus on clarity and accessibility, we aim to make these complex ideas more approachable and engaging for learners at all levels.

Table of Contents

- What are Eigenvalues and Eigenvectors?

- Importance of Eigenvalues and Eigenvectors

- How to Find Eigenvalues and Eigenvectors?

- Example Problems on Eigenvalues and Eigenvectors

- Applications of Eigenvalues and Eigenvectors

- Common Mistakes When Working with Eigenvectors

- How Do You Use Eigenvalues and Eigenvectors in Science and Technology?

- Relation Between Eigenvalues and Eigenvectors

- Can Multiple Eigenvalues Exist?

- What Does Each Component of an Eigenvector Represent?

- Advanced Concepts of Eigenvalues and Eigenvectors

- Role of Eigenvalues and Eigenvectors in Quantum Mechanics

- Visualizing Eigenvalues and Eigenvectors

- FAQs on Questions on Eigenvalues and Eigenvectors

- Conclusion

What are Eigenvalues and Eigenvectors?

Eigenvalues and eigenvectors are concepts from linear algebra that deal with linear transformations. In layman's terms, when you apply a transformation to a vector, an eigenvector is a non-zero vector that stays on its own line through the origin instead of being turned off it, and the eigenvalue is the scalar factor by which that eigenvector is stretched, compressed, or (when the factor is negative) flipped during the transformation.

Mathematically, for a given square matrix A, a non-zero vector v is an eigenvector if it satisfies the equation:

A * v = λ * v

Here, 'λ' (lambda) represents the eigenvalue. This equation signifies that the transformation of v by A results in a vector that is a scalar multiple of v itself. In other words, v stays on the same line through the origin: its length may change, and it is flipped to point the opposite way when λ is negative.

- Eigenvectors: Non-zero vectors that only change in scale when a linear transformation is applied.

- Eigenvalues: Scalars that indicate the factor by which the eigenvector is stretched or compressed.

Understanding these concepts is crucial for solving complex mathematical problems and is widely used in various computational algorithms and scientific research.
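The defining equation can be checked directly in a few lines of code. The sketch below is only an illustration: the helper names `mat_vec` and `is_eigenpair` and the sample matrix are our own choices, not part of any library.

```python
def mat_vec(A, v):
    """Multiply a matrix (given as a list of rows) by a vector (a list of numbers)."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def is_eigenpair(A, v, lam, tol=1e-9):
    """Return True if v is non-zero and A v equals lam * v within a tolerance."""
    if all(abs(x) <= tol for x in v):
        return False  # the zero vector is never an eigenvector
    Av = mat_vec(A, v)
    return all(abs(av - lam * x) <= tol for av, x in zip(Av, v))


A = [[2, 1], [1, 2]]  # sample matrix chosen for illustration

print(is_eigenpair(A, [1, 1], 3))  # True: A v = [3, 3] = 3 * v
print(is_eigenpair(A, [1, 0], 2))  # False: A v = [2, 1] is not a multiple of v
```

Note that the check rejects the zero vector explicitly, since A * 0 = λ * 0 holds for every λ and tells us nothing.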

Importance of Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors hold significant importance in mathematics and its applications in science and engineering. They offer insights into the properties of linear transformations, making them indispensable in various fields.

Some of the notable areas where eigenvalues and eigenvectors play a crucial role include:

- Quantum Mechanics: In quantum mechanics, the eigenvalues of an operator correspond to measurable quantities such as energy or momentum. Eigenvectors represent states of a quantum system.

- Vibration Analysis: Engineers use eigenvalues and eigenvectors to determine natural frequencies and modes of vibration in mechanical structures, which is crucial for ensuring stability and safety.

- Control Systems: In control systems engineering, eigenvalues help in assessing the stability of a system. A continuous-time linear system dx/dt = Ax is asymptotically stable if all the eigenvalues of A have negative real parts.

- Principal Component Analysis (PCA): In data science, PCA uses eigenvectors and eigenvalues to reduce the dimensionality of data, helping to identify patterns and simplify complex datasets.

- Facial Recognition: Eigenfaces, a technique in facial recognition systems, is based on eigenvectors. These systems analyze features of human faces to identify individuals.

The importance of eigenvalues and eigenvectors extends to other areas like economics, computer science, and environmental science, demonstrating their versatility and wide-ranging applications.
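To make the control-systems criterion from the list above concrete, here is a minimal sketch that assumes NumPy is available and that the system has the form dx/dt = Ax. The function name `is_asymptotically_stable` and both sample matrices are hypothetical, chosen only for illustration.

```python
import numpy as np


def is_asymptotically_stable(A):
    """True if every eigenvalue of A has a strictly negative real part."""
    return bool(np.all(np.linalg.eigvals(A).real < 0))


# Illustrative system matrices (assumptions, not taken from a real plant).
damped = np.array([[0.0, 1.0],
                   [-2.0, -3.0]])   # characteristic roots -1 and -2
runaway = np.array([[0.0, 1.0],
                    [2.0, 1.0]])    # characteristic roots 2 and -1

print(is_asymptotically_stable(damped))   # True
print(is_asymptotically_stable(runaway))  # False
```

A single eigenvalue with a positive real part is enough to make the second system unstable, which is why the test uses `np.all`.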

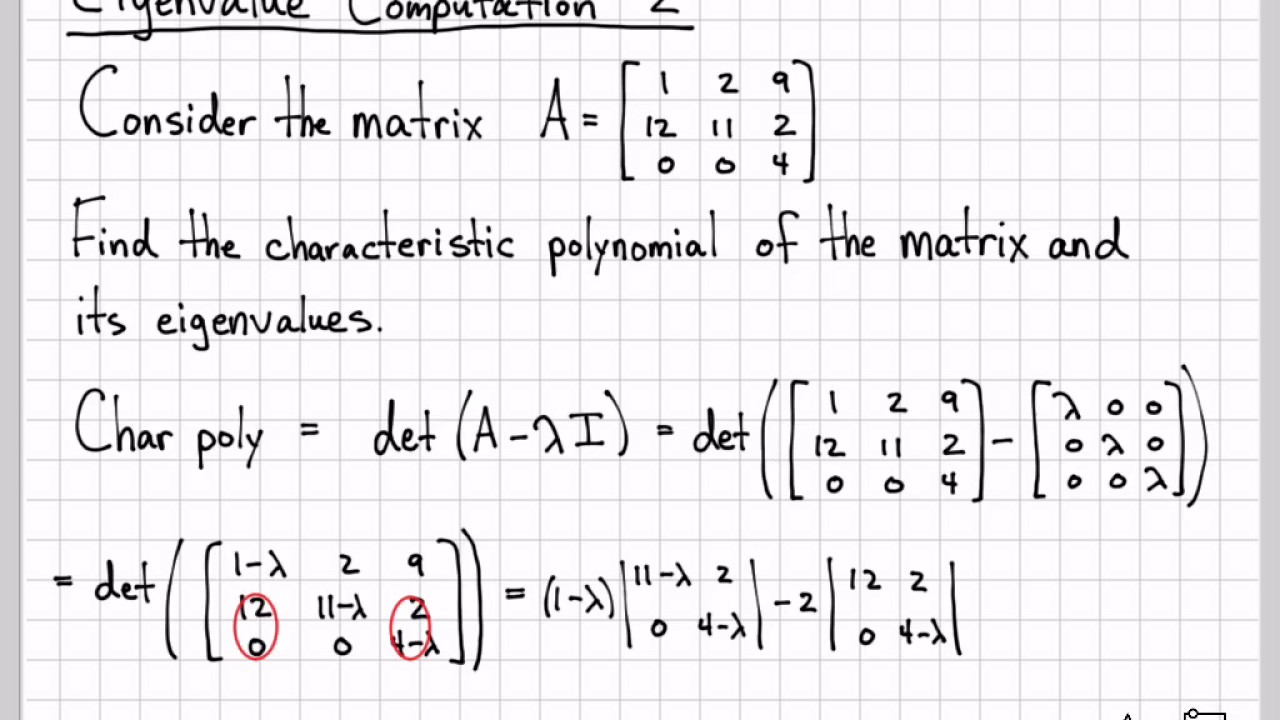

How to Find Eigenvalues and Eigenvectors?

Finding eigenvalues and eigenvectors is a systematic process that involves a series of mathematical operations. Here's a step-by-step guide to help you understand how to compute these values from a given matrix:

- Identify the Matrix: Begin by identifying the square matrix for which you need to find the eigenvalues and eigenvectors.

- Compute the Characteristic Polynomial: To find the eigenvalues, subtract λ (lambda) times the identity matrix from the original matrix A and take the determinant. The result, det(A - λI), is the characteristic polynomial; setting it to zero gives the characteristic equation:

det(A - λI) = 0

Here, I is the identity matrix of the same order as A.

- Solve for Eigenvalues: Solve the characteristic equation obtained in the previous step to find the eigenvalues.

- Substitute Eigenvalues to Find Eigenvectors: For each eigenvalue, substitute it back into the equation (A - λI)v = 0 to solve for the eigenvectors. This involves solving a system of linear equations to find the non-zero vectors that satisfy the equation.

By following these steps, you can determine the eigenvalues and eigenvectors for any square matrix, providing valuable insights into the matrix's properties and behavior.
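For a 2x2 matrix the steps above reduce to a quadratic equation, because det(A - λI) = λ² - (trace of A)λ + det(A). The following sketch follows those steps by hand using only the Python standard library. The function names and the sample matrix are our own, and for larger matrices you would normally rely on a numerical library rather than on formulas like these.

```python
import cmath


def eigenvalues_2x2(A):
    """Roots of det(A - lam*I) = lam^2 - trace*lam + det = 0 for a 2x2 matrix."""
    (a, b), (c, d) = A
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)  # cmath copes with a negative discriminant
    return (trace + root) / 2, (trace - root) / 2


def eigenvector_2x2(A, lam, tol=1e-12):
    """One non-zero solution v of (A - lam*I) v = 0 for a 2x2 matrix."""
    (a, b), (c, d) = A
    if abs(a - lam) > tol or abs(b) > tol:
        return [b, lam - a]   # sent to zero by the first row [a - lam, b]
    if abs(c) > tol or abs(d - lam) > tol:
        return [lam - d, c]   # sent to zero by the second row [c, d - lam]
    return [1, 0]             # A - lam*I is the zero matrix, so any vector works


A = [[4, 1], [2, 3]]  # illustrative matrix; its eigenvalues are 5 and 2
for lam in eigenvalues_2x2(A):
    print(lam, eigenvector_2x2(A, lam))
```

The results are returned as complex numbers so that the same code also handles matrices, such as rotations, whose eigenvalues are not real.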

Example Problems on Eigenvalues and Eigenvectors

To further solidify understanding, let's work through an example problem involving eigenvalues and eigenvectors. We'll use a simple 2x2 matrix for illustration.

Consider the matrix:

A = [2, 1; 1, 2]

Step 1: Calculate the characteristic polynomial:

det(A - λI) = det([2-λ, 1; 1, 2-λ]) = (2-λ)(2-λ) - 1*1

= λ² - 4λ + 3 = 0

Step 2: Solve for eigenvalues:

λ² - 4λ + 3 = 0

Factor the equation: (λ - 3)(λ - 1) = 0

Thus, the eigenvalues are λ₁ = 3 and λ₂ = 1.

Step 3: Find the eigenvectors:

- For λ₁ = 3, solve (A - 3I)v = 0:

[2-3, 1; 1, 2-3]v = [0; 0]

[-1, 1; 1, -1]v = [0; 0]

Solving this system, we find v = [1; 1].

- For λ₂ = 1, solve (A - I)v = 0:

[2-1, 1; 1, 2-1]v = [0; 0]

[1, 1; 1, 1]v = [0; 0]

Solving this system, we find v = [-1; 1].

These calculations demonstrate how to find eigenvalues and eigenvectors for a given matrix, providing a practical example of the process.
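It is good practice to cross-check a hand calculation numerically. The sketch below assumes NumPy is installed and uses `np.linalg.eigh`, which is intended for symmetric matrices like the one in this example and returns the eigenvalues in ascending order.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# eigh returns eigenvalues in ascending order and unit-length eigenvectors as columns.
eigenvalues, eigenvectors = np.linalg.eigh(A)
print(eigenvalues)  # approximately [1. 3.]

# Confirm A v = lam v for each eigenpair.
for lam, v in zip(eigenvalues, eigenvectors.T):
    print(lam, v, np.allclose(A @ v, lam * v))
```

The library normalizes each eigenvector to length 1, so instead of [1; 1] you will see a vector with both entries of size about 0.707, possibly with the opposite sign. Both answers describe the same direction.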

Applications of Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are used extensively in a variety of fields, offering innovative solutions to complex problems. Let's explore some of the key applications of these mathematical tools:

- Quantum Physics: In quantum physics, eigenvalues correspond to observable quantities such as energy levels, while eigenvectors represent quantum states. This concept is fundamental in understanding phenomena like wave functions and energy quantization.

- Structural Engineering: Engineers use eigenvalues and eigenvectors to analyze vibrations in structures, helping to predict how they will respond to forces. This analysis is crucial in designing buildings and bridges to withstand earthquakes or other dynamic loads.

- Data Compression: Techniques like PCA (Principal Component Analysis) leverage eigenvalues and eigenvectors to reduce data dimensionality, preserving essential information while eliminating redundancy. This is widely applied in image processing, data mining, and pattern recognition.

- Economics: In economics, eigenvalues and eigenvectors are employed in input-output models to study the relationships between different sectors of an economy, helping to predict economic growth and stability.

- Machine Learning: Eigenvectors are used in machine learning algorithms to optimize data classification and clustering, enhancing the accuracy and efficiency of models used in predictive analytics and artificial intelligence.

The diverse applications of eigenvalues and eigenvectors underscore their relevance and importance across various scientific and technological domains.
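As a rough illustration of the PCA idea mentioned above, the sketch below builds a small synthetic dataset, computes the eigenvectors of its covariance matrix, and projects the data onto the leading one. It assumes NumPy is available; the data, the random seed, and all variable names are invented for this example, and production code would more likely use a dedicated PCA routine.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic 2-D data spread mostly along the line y = x (an assumption for the demo).
t = rng.normal(size=200)
noise = 0.1 * rng.normal(size=200)
X = np.column_stack([t + noise, t - noise])

# Center the data and form its covariance matrix.
Xc = X - X.mean(axis=0)
cov = np.cov(Xc, rowvar=False)

# The covariance matrix is symmetric, so eigh applies; sort by descending eigenvalue.
eigenvalues, eigenvectors = np.linalg.eigh(cov)
order = np.argsort(eigenvalues)[::-1]
eigenvalues = eigenvalues[order]
components = eigenvectors[:, order]

explained = eigenvalues / eigenvalues.sum()  # share of variance per component
projected = Xc @ components[:, :1]           # data reduced from 2 dimensions to 1

print(components[:, 0], explained)
```

Here the first eigenvector points roughly along [1, 1], and its eigenvalue accounts for nearly all of the variance, which is why a single coordinate is enough to summarize this dataset.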

Common Mistakes When Working with Eigenvectors

Despite their utility, working with eigenvalues and eigenvectors can be challenging, leading to common mistakes. Understanding these pitfalls can help in avoiding errors and improving the accuracy of calculations:

- Confusing Eigenvectors and Basis Vectors: A common misconception is that the eigenvectors of a matrix always form a basis for the vector space. They do so only when the matrix is diagonalizable, that is, when an n × n matrix has n linearly independent eigenvectors. Otherwise the eigenvectors do not span the entire space.

- Ignoring Complex Eigenvalues: When dealing with matrices, especially those that aren't symmetric, eigenvalues may be complex. It's essential to account for complex numbers in calculations and interpretations.

- Overlooking Multiplicity: Eigenvalues can have multiplicity, meaning they may appear more than once. It's crucial to recognize multiplicity when determining the dimension of the eigenspace and the corresponding eigenvectors.

- Incorrectly Solving Linear Systems: Errors often arise when solving the system (A - λI)v = 0 for eigenvectors. It's important to apply accurate methods for solving linear equations and verify results.

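- Misinterpreting Null Space: The non-zero vectors in the null space of (A - λI) are the eigenvectors for λ. The zero vector always solves (A - λI)v = 0, but it is not an eigenvector. If λ really is an eigenvalue, a non-zero solution must exist, so finding only v = 0 signals an error in λ or in the row reduction.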

Avoiding these common mistakes ensures a more thorough and accurate understanding of eigenvalues and eigenvectors, enhancing their application in various fields.
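Two of these pitfalls, complex eigenvalues and unverified results, are easy to demonstrate. The sketch below assumes NumPy and uses a 90-degree rotation matrix as an illustrative example of a real, non-symmetric matrix with no real eigenvalues.

```python
import numpy as np

# A 90-degree rotation turns every real vector, so it has no real eigenvectors.
R = np.array([[0.0, -1.0],
              [1.0,  0.0]])

eigenvalues, eigenvectors = np.linalg.eig(R)
print(eigenvalues)  # a complex-conjugate pair with zero real part

# Always verify: the residual A v - lam v should be numerically zero.
residuals = [np.linalg.norm(R @ v - lam * v)
             for lam, v in zip(eigenvalues, eigenvectors.T)]
print(residuals)
```

Computing the residual for each pair is a cheap habit that catches both arithmetic slips in hand calculations and mismatched eigenvalue and eigenvector orderings in code.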

How Do You Use Eigenvalues and Eigenvectors in Science and Technology?

The application of eigenvalues and eigenvectors in science and technology is vast and varied. These mathematical entities allow for sophisticated analysis and solutions across multiple disciplines:

- Signal Processing: In signal processing, eigenvectors are used to decompose signals into their constituent frequencies, aiding in tasks like noise reduction and signal enhancement.

- Computer Vision: Eigenvalues and eigenvectors are employed in computer vision for object recognition and feature extraction, crucial for developing technologies like autonomous vehicles and advanced robotics.

- Finance: In finance, eigenvalues assist in portfolio optimization and risk assessment by analyzing the correlations between different assets and predicting future trends.

- Network Analysis: Analyzing eigenvalues of adjacency matrices helps in understanding the connectivity and robustness of networks, applicable in social network analysis and internet infrastructure.

- Genomics: In genomics, eigenvectors are used in principal component analysis to identify genetic structures and variations, contributing to advancements in personalized medicine and genetic research.

The pervasive use of eigenvalues and eigenvectors in science and technology highlights their importance in driving innovation and solving complex problems.
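For the network analysis case, one simple approach is power iteration: multiply a starting vector by the adjacency matrix again and again, and it settles onto the eigenvector of the largest eigenvalue, whose entries can be read as centrality scores. The sketch below is a bare-bones version using only the standard library. The four-node graph and the function name are invented for illustration, and the method as written assumes a connected, non-bipartite graph so that the iteration converges.

```python
def power_iteration(A, iterations=200):
    """Estimate the dominant eigenvalue and a unit-length eigenvector of A."""
    n = len(A)
    v = [1.0] * n
    for _ in range(iterations):
        w = [sum(A[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]  # renormalize so the numbers stay bounded
    Av = [sum(A[i][j] * v[j] for j in range(n)) for i in range(n)]
    lam = sum(x * y for x, y in zip(v, Av))  # Rayleigh quotient for a unit vector
    return lam, v


# Hypothetical undirected graph: a triangle on nodes 0, 1, 2 plus node 3 attached to node 0.
adjacency = [
    [0, 1, 1, 1],
    [1, 0, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
]

dominant, centrality = power_iteration(adjacency)
print(dominant, centrality)
```

Node 0, which has the most connections, receives the largest score, while nodes 1 and 2 receive equal scores because they play symmetric roles in the graph.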

Relation Between Eigenvalues and Eigenvectors

The relation between eigenvalues and eigenvectors is fundamental to understanding the behavior of linear transformations. This relationship is encapsulated in the equation:

A * v = λ * v

Here, A is a square matrix, v is an eigenvector, and λ is the corresponding eigenvalue. This equation reflects the following relationship:

- Scalar Multiplication: The eigenvalue λ is a scalar that represents how much the eigenvector v is stretched or compressed when transformed by A.

- Direction Preservation: The eigenvector v retains its direction during the transformation, although its magnitude may change, as indicated by λ.

- Characteristic Equation: Rewriting A * v = λ * v as (A - λI)v = 0 shows that a non-zero solution v exists only when A - λI is singular. This gives the characteristic equation det(A - λI) = 0, whose roots are the eigenvalues λ.

This relationship is pivotal in analyzing the properties and effects of linear transformations, providing insights into their invariant directions and scaling factors.

Can Multiple Eigenvalues Exist?

Yes. An n × n matrix has n eigenvalues when they are counted with multiplicity over the complex numbers, and they can be distinct or repeated. When the same eigenvalue occurs more than once, it is described by its multiplicity. There are two types of multiplicity to consider:

- Algebraic Multiplicity: This refers to the number of times an eigenvalue appears as a root of the characteristic equation. For example, if λ = 2 has algebraic multiplicity 3, then (λ - 2)³ is a factor of the characteristic polynomial.

- Geometric Multiplicity: This is the dimension of the eigenspace corresponding to an eigenvalue. It indicates the number of linearly independent eigenvectors associated with the eigenvalue.

It's important to note that the geometric multiplicity of an eigenvalue is always less than or equal to its algebraic multiplicity. When an eigenvalue has a geometric multiplicity that's less than its algebraic multiplicity, the matrix is called defective: it does not have a full set of linearly independent eigenvectors and therefore cannot be diagonalized.

The existence of multiple eigenvalues is a significant aspect of matrix analysis, influencing the matrix's diagonalization and its behavior under transformations.
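The difference between the two multiplicities shows up clearly in a small example. The sketch below assumes NumPy and uses the fact that the geometric multiplicity of λ equals n minus the rank of (A - λI). The function name and both matrices are illustrative choices.

```python
import numpy as np


def geometric_multiplicity(A, lam):
    """Dimension of the eigenspace of lam: n - rank(A - lam*I)."""
    n = A.shape[0]
    return n - np.linalg.matrix_rank(A - lam * np.eye(n))


# Both matrices have the characteristic polynomial (lam - 2)^2,
# so lam = 2 has algebraic multiplicity 2 in each case.
shear = np.array([[2.0, 1.0],
                  [0.0, 2.0]])
scaling = 2.0 * np.eye(2)

print(geometric_multiplicity(shear, 2.0))    # 1, so this matrix is defective
print(geometric_multiplicity(scaling, 2.0))  # 2, a full set of eigenvectors
```

The first matrix has only one independent eigenvector direction for λ = 2, while the second sends every vector to twice itself, so every non-zero vector is an eigenvector.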

What Does Each Component of an Eigenvector Represent?

Each component of an eigenvector has specific significance in the context of linear transformations. An eigenvector is a non-zero vector that, when transformed by a matrix, results in a vector that is a scalar multiple of itself. The components of an eigenvector represent:

- Direction: The components indicate the direction in which the eigenvector points. This direction remains unchanged under the transformation, making eigenvectors pivotal in understanding invariant directions.

- Relative Magnitude: The relative sizes of the components provide information about the proportions of the eigenvector in different dimensions. These proportions help in visualizing the vector in the context of the transformation.

- Scaling Factor: The eigenvalue associated with the eigenvector represents the scaling factor. Each component is scaled by this factor, reflecting how the vector's magnitude changes during the transformation.

Understanding these components is essential for interpreting the effects of linear transformations and the role of eigenvectors in maintaining directionality and proportion.
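Because only the direction and the proportions between components matter, eigenvectors are often rescaled to unit length before they are compared. The short sketch below uses only the standard library; the `normalize` helper is our own name, and the vectors are multiples of the eigenvector [1; 1] of the matrix [2, 1; 1, 2].

```python
import math


def normalize(v):
    """Rescale a non-zero vector to length 1, keeping its direction."""
    length = math.sqrt(sum(x * x for x in v))
    if length == 0:
        raise ValueError("the zero vector has no direction")
    return [x / length for x in v]


# Positive multiples of one eigenvector all normalize to the same unit vector.
print(normalize([1, 1]))
print(normalize([5, 5]))

# A negative multiple normalizes to the opposite unit vector, still on the same line.
print(normalize([-2, -2]))
```

This is also why software packages may report an eigenvector that looks different from a hand-computed one: the two usually differ only by a scalar factor.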

Advanced Concepts of Eigenvalues and Eigenvectors

For those seeking a deeper understanding of eigenvalues and eigenvectors, several advanced concepts expand on their foundational principles:

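- Diagonalization: A matrix A is diagonalizable if it can be written as A = P D P⁻¹, where D is a diagonal matrix of eigenvalues and P is an invertible matrix whose columns are the corresponding eigenvectors. Diagonalization simplifies matrix powers and exponentiation, since Aᵏ = P Dᵏ P⁻¹ and the powers of a diagonal matrix are computed entry by entry.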

- Jordan Canonical Form: This form extends diagonalization to matrices that may not have a full set of linearly independent eigenvectors. It provides a block-diagonal representation, capturing the matrix's essential characteristics.

- Singular Value Decomposition (SVD): SVD is a factorization of a matrix into three matrices, capturing its geometric properties. It generalizes eigenvalues and eigenvectors to non-square matrices, with applications in data compression and signal processing.

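- Perron-Frobenius Theorem: This theorem applies to square matrices whose entries are all strictly positive. It guarantees a real, positive eigenvalue that is strictly larger in absolute value than every other eigenvalue, with an eigenvector whose components are all positive. It has applications in population models and economic systems.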

- Hermitian and Unitary Matrices: For Hermitian matrices, eigenvalues are real, and eigenvectors belonging to distinct eigenvalues are orthogonal. Unitary matrices have eigenvalues with absolute value one, and they preserve vector norms in transformations.

These advanced concepts provide a richer framework for analyzing complex matrices, enhancing the utility and application of eigenvalues and eigenvectors in diverse mathematical and scientific contexts.
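Diagonalization is the easiest of these ideas to try out. The sketch below assumes NumPy and factors a small matrix as P D P⁻¹, then uses the factorization to compute a matrix power. The variable names are our own.

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 2.0]])

# Columns of P are eigenvectors; D holds the matching eigenvalues on its diagonal.
eigenvalues, P = np.linalg.eig(A)
D = np.diag(eigenvalues)
P_inv = np.linalg.inv(P)

reconstructed = P @ D @ P_inv  # should reproduce A

# Powers only require raising the diagonal entries to the same power.
A_pow_5 = P @ np.diag(eigenvalues ** 5) @ P_inv
print(np.round(A_pow_5))  # [[122. 121.], [121. 122.]]
```

This works because the matrix has two independent eigenvectors. For a defective matrix P would not be invertible, which is where the Jordan canonical form comes in.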

Role of Eigenvalues and Eigenvectors in Quantum Mechanics

In quantum mechanics, eigenvalues and eigenvectors play a crucial role in describing the fundamental properties of quantum systems. These concepts are integral to understanding the behavior and interactions of subatomic particles:

- Observable Quantities: Eigenvalues correspond to measurable quantities such as energy levels, momentum, and spin. Each physical observable is represented by a Hermitian operator, and the possible results of a measurement are that operator's eigenvalues.

- Quantum States: Eigenvectors represent the quantum states of a system. When a measurement is made, the system collapses into an eigenstate corresponding to the observed eigenvalue, reflecting the probabilistic nature of quantum mechanics.

- Schrödinger Equation: Solving the time-independent Schrödinger equation, a fundamental equation in quantum mechanics, amounts to finding the eigenvalues and eigenvectors of the Hamiltonian operator, which give the energy levels and stationary states of a quantum system.

- Uncertainty Principle: The Heisenberg Uncertainty Principle follows from the non-commutativity of the position and momentum operators. Because these operators do not commute, they share no common eigenvectors, so no state has a definite value of both quantities at once.

- Quantum Entanglement: Eigenvectors are used to describe entangled states, where the quantum states of particles are interdependent, leading to correlations that defy classical intuitions.

Eigenvalues and eigenvectors provide a mathematical framework for understanding the complex and often counterintuitive phenomena of quantum mechanics, offering insights into the fundamental nature of reality.
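A two-level system gives a compact illustration of these ideas. The sketch below assumes NumPy and uses the Pauli X matrix as the observable. It also applies the Born rule (the probability of an outcome is the squared magnitude of the overlap between the state and the eigenstate), which the list above does not spell out. The state `psi` is an arbitrary choice for the demo.

```python
import numpy as np

# Pauli X, a Hermitian operator representing a spin measurement along the x axis.
sigma_x = np.array([[0.0, 1.0],
                    [1.0, 0.0]])

# eigh suits Hermitian operators: real eigenvalues, orthonormal eigenvectors as columns.
outcomes, eigenstates = np.linalg.eigh(sigma_x)
print(outcomes)  # the possible measurement results: -1 and +1

# Illustrative state before measurement.
psi = np.array([1.0, 0.0])

# Born rule: probability of each outcome is |<eigenstate|psi>|^2.
probabilities = np.abs(eigenstates.conj().T @ psi) ** 2
print(probabilities)  # 0.5 for each outcome
```

The eigenvalues are real, as expected for a Hermitian operator, and the probabilities add up to 1 because the eigenstates form an orthonormal basis.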

Visualizing Eigenvalues and Eigenvectors

Visualizing eigenvalues and eigenvectors can greatly aid in understanding their significance and behavior in linear transformations. Here are some ways to conceptualize these mathematical entities:

- Geometric Interpretation: Eigenvectors can be visualized as arrows pointing in specific directions in a vector space. When a linear transformation is applied, these arrows maintain their direction but may change in length, as determined by the eigenvalues.

- Graphical Representation: In two-dimensional spaces, plotting the transformation of vectors can illustrate how eigenvectors remain invariant in direction, while other vectors may rotate or skew.

- Matrix Decomposition: Visualizing matrix decomposition techniques like diagonalization or singular value decomposition can help in understanding how matrices act on eigenvectors and the scaling effect of eigenvalues.

- Dynamic Simulations: Software tools and simulations can dynamically show how transformations affect vectors, making it easier to grasp the concepts of eigenvalues and eigenvectors in action.

- Physical Analogies: Analogies such as stretching or shrinking of objects can provide intuitive insights into how eigenvalues scale eigenvectors during transformations.

By employing these visualization techniques, learners can develop a more intuitive understanding of eigenvalues and eigenvectors, enhancing their ability to apply these concepts in practical situations.
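Even without a plotting tool, a quick numerical sweep conveys the geometric picture. The sketch below uses only the standard library: it applies an illustrative matrix to unit vectors at different angles and reports how far each one is turned. The function name `turn_angle` is our own.

```python
import math

A = [[2.0, 1.0],
     [1.0, 2.0]]  # illustrative matrix with eigenvector directions at 45 and 135 degrees


def turn_angle(A, theta_deg):
    """Signed angle in degrees between the unit vector at theta_deg and its image under A."""
    t = math.radians(theta_deg)
    v = (math.cos(t), math.sin(t))
    w = (A[0][0] * v[0] + A[0][1] * v[1],
         A[1][0] * v[0] + A[1][1] * v[1])
    cross = v[0] * w[1] - v[1] * w[0]
    dot = v[0] * w[0] + v[1] * w[1]
    return math.degrees(math.atan2(cross, dot))


for theta in range(0, 180, 15):
    print(f"{theta:3d} deg -> turned by {turn_angle(A, theta):7.2f} deg")
```

Most directions are rotated by the transformation, but the turn drops to zero at 45 and 135 degrees. Those are exactly the eigenvector directions [1; 1] and [-1; 1] of this matrix.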

FAQs on Questions on Eigenvalues and Eigenvectors

- What are eigenvalues and eigenvectors used for?

Eigenvalues and eigenvectors are used to analyze linear transformations, solve differential equations, perform data compression through PCA, and in various applications in physics, engineering, and computer science.

- How do eigenvalues and eigenvectors relate to matrices?

Eigenvalues and eigenvectors are properties of square matrices. An eigenvector gives a direction that the matrix's transformation leaves invariant, and its eigenvalue gives the factor by which vectors along that direction are scaled.

- Can eigenvalues be negative?

Yes, eigenvalues can be negative, indicating that the corresponding eigenvector is inverted (flipped) during the transformation.

- What happens if a matrix has complex eigenvalues?

A real matrix can have complex eigenvalues only if it is not symmetric, and they then occur in conjugate pairs. They indicate that the transformation involves rotation, or oscillation in a dynamical system, and they appear in fields such as vibration analysis and control systems.

- How do you interpret the magnitude of an eigenvalue?

The magnitude of an eigenvalue is the factor by which the eigenvector's length is scaled. A magnitude greater than 1 stretches the eigenvector, a magnitude less than 1 compresses it, and a magnitude of exactly 1 leaves its length unchanged.

- Why are eigenvectors not unique?

Eigenvectors are not unique because any non-zero scalar multiple of an eigenvector still satisfies the eigenvalue equation. This non-uniqueness is inherent to their definition.

Conclusion

Understanding and mastering questions on eigenvalues and eigenvectors is essential for anyone involved in mathematics, science, or engineering. These concepts form the backbone of numerous applications, from analyzing quantum systems to optimizing complex data structures. By exploring the intricacies of eigenvalues and eigenvectors, learners can unlock new insights into the behavior of linear transformations and their impact on various scientific and technological processes. With a strong foundation in these mathematical tools, you can approach diverse challenges with confidence, creativity, and precision.

For further reading, consider exploring resources on linear algebra and its applications in various fields, as well as interactive tools and software that can provide dynamic visualization and deeper understanding of these fundamental concepts.
